As museum teams revisit their interpretive strategy in light of COVID-19, many are starting content development from scratch and asking foundational questions such as:

- What content do visitors want?

- In what format do they want this content?

- What access points will they engage with?

A/B testing is one way to start answering these questions. We recommend starting with a single tour in your mobile guide, a single interactive screen, or another isolated project so that your team can keep the test focused and meaningful as you collect feedback and iterate on what you learn.

What Is A/B Testing, Exactly?

A/B testing, also called split testing, is a research method that compares two versions of a piece of content or an experience to see which performs better with users. The most important rule of A/B testing is to keep it simple: have a clear goal for each test, test one variable at a time, and leave the test in front of visitors long enough to be confident that you're collecting meaningful data.
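
In practice, a split test means showing each visitor one of the two versions at random. The Python sketch below illustrates one common way to do that, assuming each touchscreen or app session has a unique ID. The `session_id` value and the `assign_variant` helper are hypothetical and not part of any particular museum platform. Hashing the ID gives a roughly even split between the two versions while making sure a given session always sees the same one.

```python
import hashlib


def assign_variant(session_id: str) -> str:
    """Split sessions roughly 50/50 between variant "A" and variant "B".

    `session_id` is a hypothetical unique identifier that a kiosk or app
    would generate per visit. Hashing it (rather than calling a random
    number generator each time) means the same session always gets the
    same variant.
    """
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    # Use the parity of the first byte of the hash to pick a version.
    return "A" if digest[0] % 2 == 0 else "B"


if __name__ == "__main__":
    # Simulate 1,000 sessions and count how many land in each variant.
    counts = {"A": 0, "B": 0}
    for i in range(1000):
        counts[assign_variant(f"session-{i}")] += 1
    print(counts)
```

Only the assignment is shown here. Everything else about the two versions should stay identical apart from the one variable under test.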

Identify Your Goal & Define Your Test

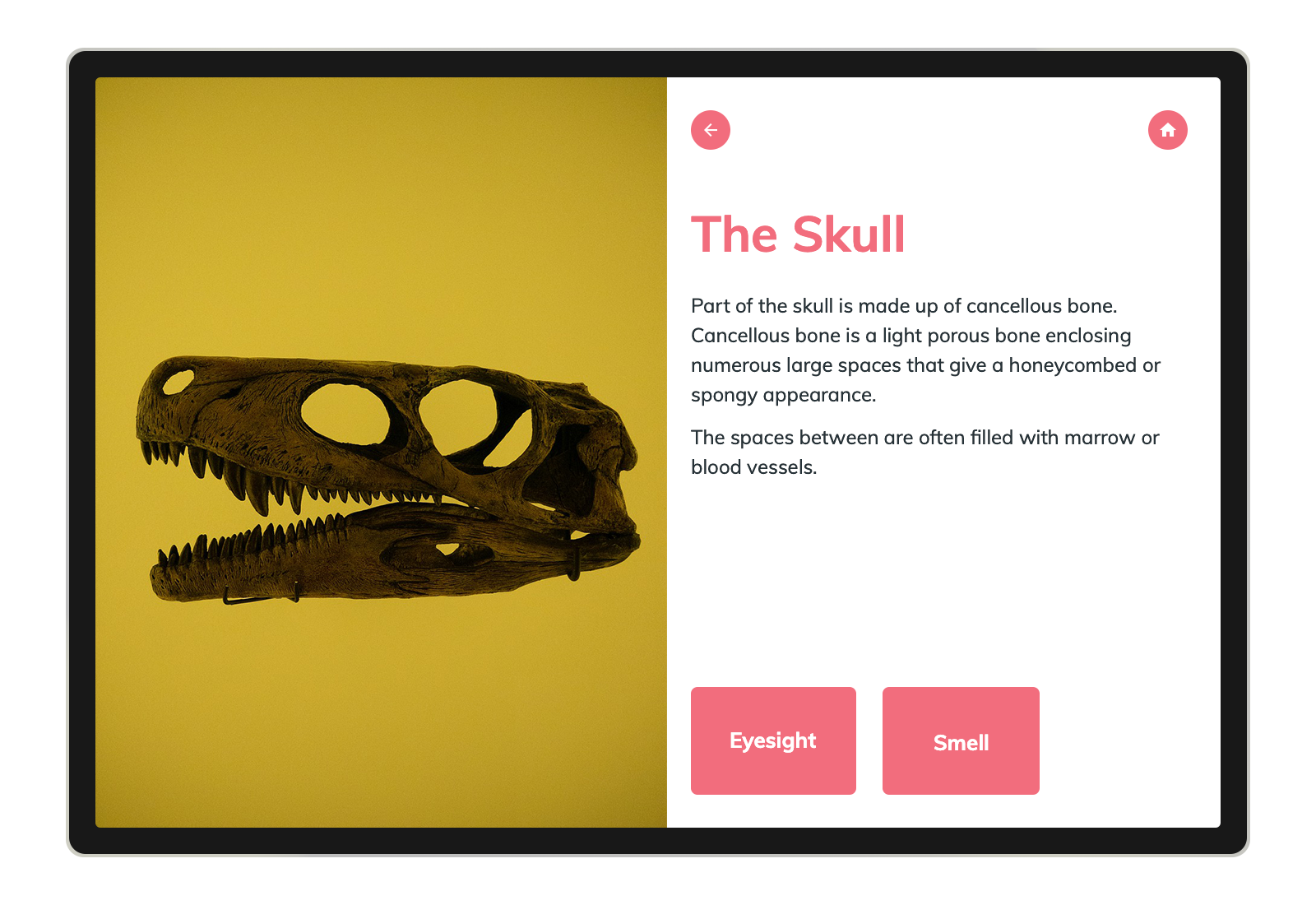

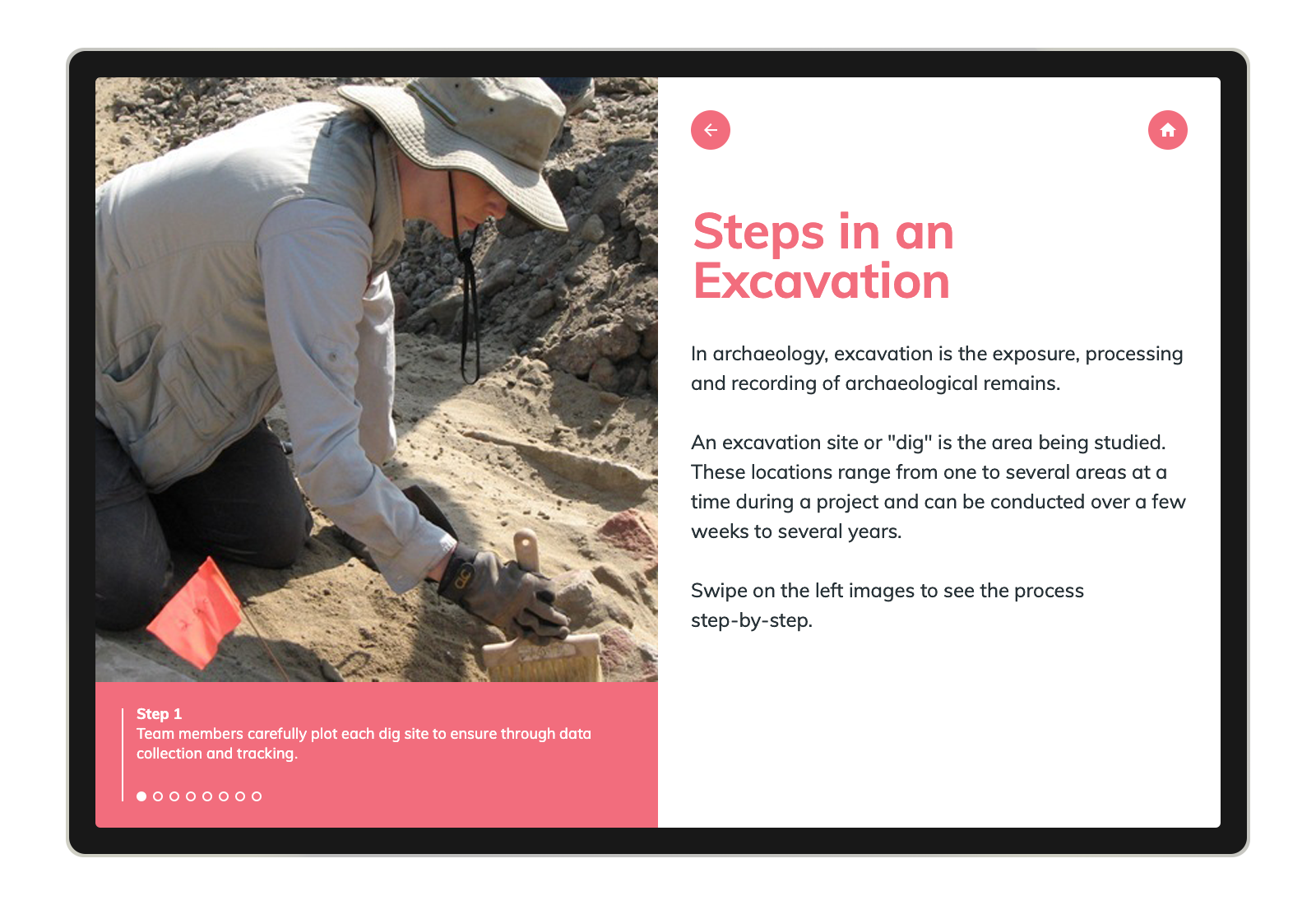

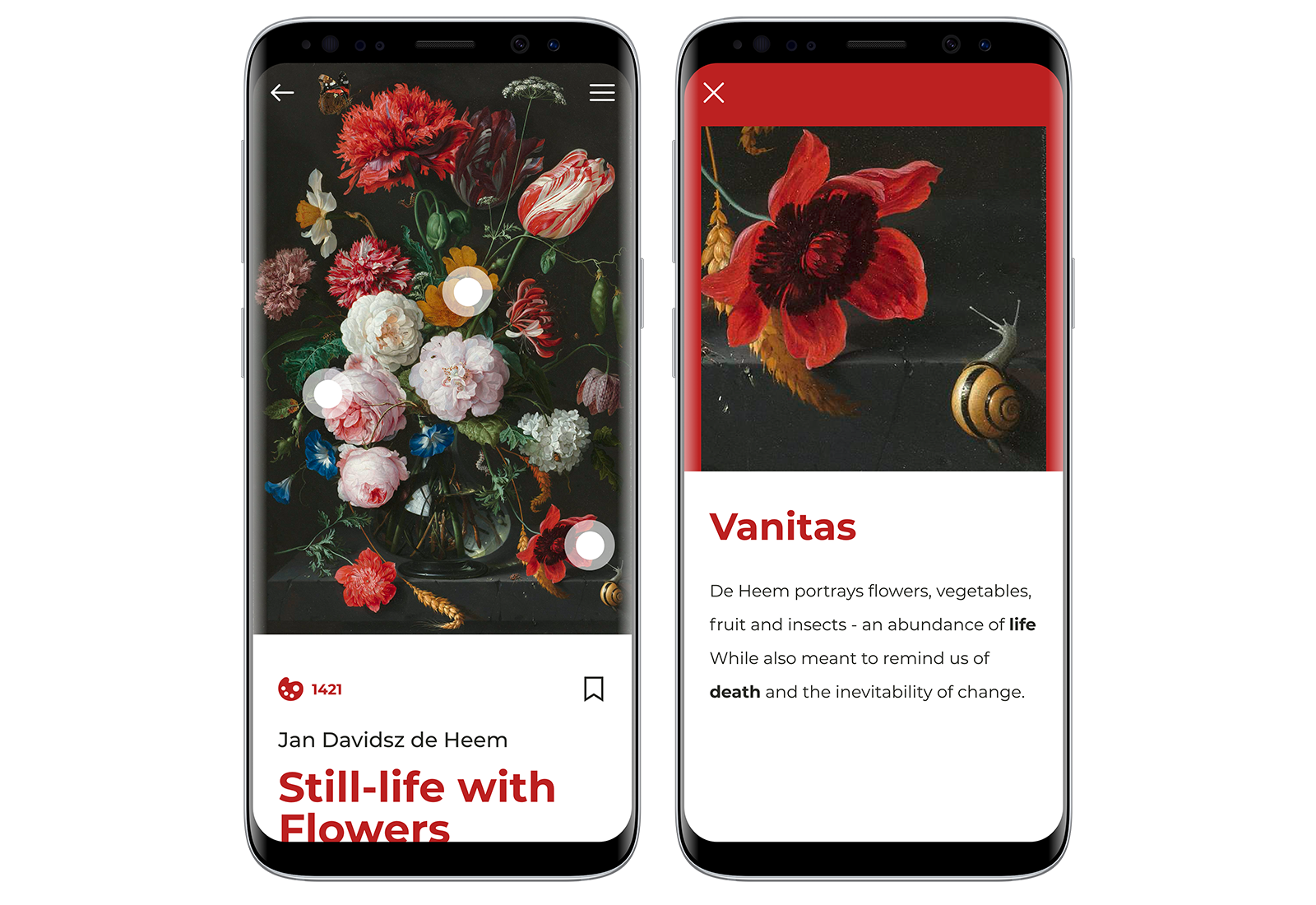

Defining your goal is likely the most complex part of A/B testing. There are often many competing priorities among a variety of stakeholders, so agreeing on a testing goal will help focus and define your test. What you test will depend on the channel, the audience, and the content available to you. Many of our museum teams are testing video content against images and text to see which leads to longer engagement, while others are comparing conversion rates when resources link out to an external website versus when an additional layer of content is housed within the application. Key elements to test include content length and content type, with engagement time a common measure of success.

Below, a natural history museum shows how it tests content on its in-gallery touchscreens. In this scenario, the team is measuring engagement time to understand how much time visitors will invest in the touchscreen learning experience. Will visitors click the "Learn more" buttons or not? What is the overall engagement time, and how does the number of pages viewed differ between the two experiences?
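
The three questions in this scenario map onto three simple per-variant measures: average engagement time, average pages viewed, and the share of sessions that clicked "Learn more." The Python sketch below shows one way to summarize them, assuming the touchscreen logs one record per visitor session. The `Session` fields and the `summarize` helper are hypothetical names chosen for illustration, not an existing analytics API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    variant: str               # "A" or "B": which version the visitor saw
    seconds_engaged: float     # total time spent on the touchscreen
    pages_viewed: int          # number of screens the visitor opened
    clicked_learn_more: bool   # whether any "Learn more" button was tapped


def summarize(sessions: list[Session], variant: str) -> dict:
    """Return descriptive averages for one variant's sessions."""
    group = [s for s in sessions if s.variant == variant]
    if not group:
        raise ValueError(f"no sessions recorded for variant {variant!r}")
    return {
        "sessions": len(group),
        "mean_seconds": mean(s.seconds_engaged for s in group),
        "mean_pages": mean(s.pages_viewed for s in group),
        # True counts as 1 and False as 0, so this is the click-through share.
        "learn_more_rate": sum(s.clicked_learn_more for s in group) / len(group),
    }
```

Calling `summarize(sessions, "A")` and `summarize(sessions, "B")` on the same log gives two summaries to compare side by side. These are descriptive averages only. Before declaring a winner, a team would typically also confirm that each variant collected enough sessions, and may run a statistical significance test on the difference.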